|

|

| (8 intermediate revisions by one other user not shown) |

| Line 1: |


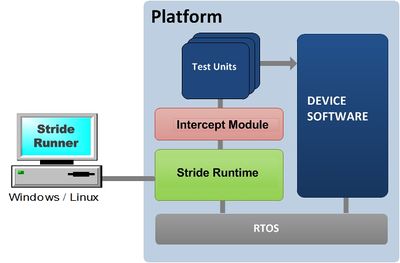

| STRIDE Framework tests (script and native target implementations) are controlled by a host computer, physically connected to the target via a configurable communication channel (TCP/IP or serial port). This article describes some common usage patterns and presents several examples. | | __NOTOC__ |

| | Tests are executed using the [[STRIDE Runner | Stride Runner]] controlled by a host computer that is physically connected to the target via a configurable communication channel (TCP/IP or serial port). The application software is required to be running, including the [[STRIDE Runtime | Stride Runtime]], enabling a ''connection'' between the ''Runner'' and ''Runtime''. |

|

| |

|

| == Prerequisites ==

| | '''Block diagram''' |

|

| |

|

| === Host ===

| |

| Tests are initiated on the host by running the program [[Stride Executable|stride]] on the host computer (installed as part of STRIDE [[Desktop Installation]]). See the linked article for reference information.

| |

|

| |

|

| In order for [[Stride Executable|stride]] to run your tests, the STRIDE database (.sidb) file that was produced as part of the target build must be accessible to the program via the host filesystem. This is trivial to accomplish when the target is built on the host computer (as when building and running tests off-target) or when the host and target computers are running the same operating system and are connected via LAN. But for dissimilar operating systems, you will need to mount the target filesystem on the host computer. (Many of our customers use [http://en.wikipedia.org/wiki/Samba_(software) Samba] to accomplish this.)

| | [[Image:Running_Tests.jpg|400px|Connection Block Diagram]] |

|

| |

|

| === Target ===

| |

| * Target transport configured

| |

| * Target built with the STRIDE library and some STRIDE source instrumentation (details can be found [[Integration Overview|here]]). If any native test units have been written, these must also be included in the build.

| |

| * Target app running <ref>If target is multi-process, both the target and the STRIDE I/O daemon process must be started.</ref>

| |

|

| |

|

| == Running Tests ==

| | '''Invoking the STRIDE Runner (aka <tt>stride</tt>) from a console''' |

| The following command line illustrates how to run some STRIDE tests without publishing results to [[STRIDE Test Space]].

| |

|

| |

|

| We assume the following:

| | stride --database="path/to/YourTestApp.sidb" --device=TCP:localhost:8000 --run="*" |

| * We are testing against a target build that contains the following native code test units: myTest1, myTest2 and myTest3.

| |

| * We also want to run host-based tests implemented in a Perl test module, myScriptTests.pm.

| |

| * The target device is running, and is available on the LAN via TCP/IP at 192.168.0.53 | |

|

| |

|

| The command line to run the desired tests would be:

| | Option files can be helpful (i.e. my.opt) |

| <pre>

| |

| stride --run "myTest1;myTest2;myTest3" --run myScriptTests.pm --database "/my/path/targetApp.sidb" --device TCP:192.168.0.53:8000

| |

| </pre>

| |

|

| |

|

| A couple of things to note:

| | --database "path/to/YourTestApp.sidb" |

| * If you are running tests from a shared location and others may be running tests against the same database, specify a unique output file location (via the -o option) so that the output files do not overwrite one another.

| | --device TCP:localhost:8000 |

| * If you will be running many tests against a specific database and/or device, consider setting [[Stride_Executable#Environment_Variables|environment variables]] to the corresponding values, or put command line options into an options file (via the -O option).

| |

| * You can refer to the device address using a DNS name if desired (e.g. TCP:testcomputer:8000)
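The options-file approach from the notes above can be sketched as follows. This is a minimal sketch, assuming a POSIX shell; the file name <tt>my.opt</tt>, the database path and the device address are placeholders, and the final stride command is only printed so the snippet can be tried without a target attached.

```shell
#!/bin/sh
# Placeholder values; substitute your own database path and device address.
DB="/my/path/targetApp.sidb"
DEVICE="TCP:192.168.0.53:8000"
OPTS="my.opt"

# Write one option per line, using only options shown in this article.
cat > "$OPTS" <<EOF
--database "$DB"
--device $DEVICE
EOF

# Build and print the command that would run all target-based test units.
CMD="stride --options_file $OPTS --run \"*\""
echo "$CMD"
```

Keeping the database and device in one file means each invocation only has to vary the <tt>--run</tt> arguments.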

| |

|

| |

|

| === Running All Tests ===

| | stride --options_file my.opt --run "*" |

| By default, stride runs no tests unless at least one <tt>--run</tt> option is specified. A simple way to run ''all'' target-based test units (tests implemented in native code on the device) is to pass the wildcard to <tt>--run</tt>:

| |

|

| |

|

| stride --database TestApp.sidb --device TCP:127.0.0.1:8000 --run "*"

| |

|

| |

|

| This will cause all test units that have been captured in the STRIDE database to be executed.

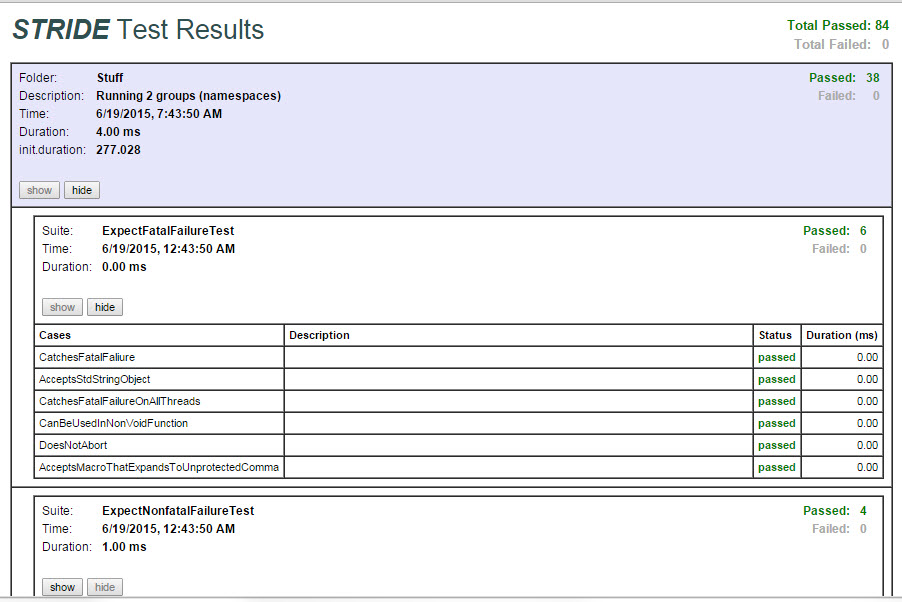

| | '''Reviewing your Results''' |

|

| |

|

| There is no way to run ''all'' perl test modules since we don't capture information about these in the STRIDE database. However, if you organize your test modules in a directory hierarchy, the [[Stride_Runner|runner]] provides a convenient syntax for executing all test modules in a directory - for example:

| | [[Image:Runner_Test_Report.jpg |Example Report]] |

|

| |

|

| stride --database TestApp.sidb --device TCP:127.0.0.1:8000 --run "path/to/my_directory"

| |

|

| |

|

| will recursively search ''my_directory'' for script test modules (identified by file extension) and execute each as it is found.

| | == Input == |

| | In order to run tests, you must provide the following information to stride.exe: |

|

| |

|

| === Running A Subset of Tests ===

| | ;database file |

| The stride test runner allows you to specify a subset of the test units in an existing target build.

| | : This is the .sidb file that is created by the ''Stride Compiler'' during the target build process. This file contains meta-information used to run tests. |

|

| |

|

| For example, assume that you have an .sidb file produced from your target build that has many test units defined. You want to run only three of the test units to reproduce a bug, say. We'll assume that the test units you want to run are named ''MyTest1'', ''MyTest2'', and ''MyTest3''. Further, we'll assume that the environment variable <tt>[[Stride_Runner#Environment_Variables|STRIDE_DEVICE]]</tt> is set to reflect your device parameters so that the <tt>--device </tt> argument can be omitted.

| | ;device parameters |

| | : This defines how to connect to the target. |

|

| |

|

| The following command line will run the three tests in the order shown, putting their results into the root test suite.

| | ;tests to run |

| | : A set of [[Test Unit| Test Units]] compiled in the database. You may also optionally specify named hierarchical suites in which to put the results of the tests you designate. |

|

| |

|

| stride --database TestApp.sidb --run /{MyTest1;MyTest2;MyTest3}

| |

|

| |

|

| Or, if your test results are to be published in the root suite, you can use this simplified syntax:

| | == Output == |

| | Upon test completion, test output is always written as follows: |

|

| |

|

| stride --database TestApp.sidb MyTest1 MyTest2 MyTest3

| | ;console output |

| | : A quick summary of results is written to standard output. Test counts are shown for the categories '''passed''', '''failed''', '''in progress''', and '''not in use'''. |

|

| |

|

| You can automate the running of a subset of test units whose names match a text pattern by combining the stride runner's [[Listing Test Units]] capability with a small Perl script, as shown below.

| | ;local xml file |

| | : Detailed results are written to a local xml file. By default, this file is written to the directory where the input Stride database file is located and given the same name as the database file with an extension of ".xml". If you open this file in a web browser, an xsl transform is automatically downloaded and applied before rendering. |

|

| |

|

| <source lang="perl">

| | Optionally, you may also publish the results to [http://www.testspace.com Test Space] upon test completion. |

| while( <> ) {

| |

| if( /myPrefix_/ ) {

| |

| print "-r $_";

| |

| }

| |

| }

| |

| </source>

| |

| | |

| The Perl script reads each line from stdin and tests it against the regular expression <tt>myPrefix_</tt>. If the line matches, it is written to stdout prefixed with <tt>-r </tt>.

| |

| | |

| If you save the perl script to a file named myTests.pl, you can automate the running of tests that match your prefix as follows:

| |

| | |

| stride --list --database TestApp.sidb | perl myTests.pl > tests.txt

| |

| stride --database TestApp.sidb --options_file tests.txt
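The same filtering can be done without Perl. The following is a minimal sketch, assuming a POSIX shell and assuming that <tt>stride --list</tt> prints one test unit name per line (as the Perl script above implies). The function name <tt>filter_tests</tt> and the sample names are made up for illustration, and the real stride call is replaced by sample input.

```shell
#!/bin/sh
# Keep only the lines containing the prefix and prepend "-r " to each.
filter_tests() {
    grep 'myPrefix_' | sed 's/^/-r /'
}

# Sample input standing in for: stride --list --database TestApp.sidb
printf '%s\n' myPrefix_Alpha OtherTest myPrefix_Beta | filter_tests > tests.txt
cat tests.txt
```

The resulting <tt>tests.txt</tt> can then be passed to stride with <tt>--options_file</tt> exactly as shown above.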

| |

| | |

| == Publishing Test Results ==

| |

| | |

| === Saving Results to the Local Disk ===

| |

| When [[Stride Executable|stride]] is executed to run tests, the following files are written to the current directory. By default, they are named after the specified database. You can specify a different name and location by using the -o option when you run stride. If these files already exist, they are overwritten.

| |

| | |

| ; ''[database_name]''.xml

| |

| : Raw xml test output.

| |

| ; ''[database_name]''.xsl

| |

| : Stylesheet to format the xml file for viewing in a web browser. (''This file is automatically downloaded only if you are connected to the Internet''.)

| |

| | |

| === Displaying Local Results Data ===

| |

| If you open the xml file in a browser, the xsl stylesheet (if present) will be automatically applied to the xml so that the data is rendered in html within the browser.

| |

| | |

| If you are using '''Google Chrome''' please see [[Browser Compatibility]].

| |

| | |

| === Publishing Results to STRIDE Test Space ===

| |

| [[Stride Executable|stride]] can also be instructed to upload test results to [[STRIDE Test Space]].

| |

| === Example: Desktop Testing with Publication to Test Space ===

| |

| This example demonstrates the running of specified target tests followed by publication of the results to [[STRIDE Test Space]].

| |

| | |

| Here, we create an options file since the command line is getting very long. (We could put several of these option values in [[Stride_Executable#Environment_Variables|environment variables]] instead if desired.)

| |

| | |

| The option file is named myOptions.txt, is located in the current directory and contains the following text:

| |

| | |

| <pre>

| |

| --database "/my/path/targetApp.sidb"

| |

| --device TCP:192.168.0.53:8000

| |

| --run "/{myTest1;myTest2; myTest3}"

| |

| --upload

| |

| --testspace username:password@mycompany.stridetestspace.com

| |

| --project "Super Repeater"

| |

| --space "Input Processor"

| |

| --output myoutputfile.xml

| |

| </pre>

| |

| | |

| The command line is then:

| |

| stride --options_file "myOptions.txt"

| |

| | |

| '''Note:''' For publishing to succeed, the username and password must be correct, and the project and space must already exist. If you are using Test Space for the first time, check with your administrator about how to create projects and spaces.

| |

| | |

| == Publishing Pre-Existing Test Report Files ==

| |

| Stride.exe can also upload one or more pre-existing report files to Test Space without running any tests.

| |

| | |

| To accomplish this, specify the publishing-related parameters but do not specify the <tt>--device</tt> parameter or any <tt>--run</tt> parameters when you run stride. The file to be uploaded is specified by the <tt>--output</tt> parameter.

| |

| | |

| If you want to aggregate more than one .xml file into a single Test Space result set, run stride once for each file to be uploaded, specifying the <tt>--upload</tt> parameter accordingly.

| |

| | |

| Note that stride will use any environment variables that are in effect, so if you have a non-empty value set for <tt>STRIDE_DEVICE</tt> it must be set to empty in your terminal session before upload-only will be performed. Similarly, environment variables STRIDE_TESTSPACE_URL, STRIDE_TESTSPACE_PROJECT, and STRIDE_TESTSPACE_SPACE will be used if non-empty. (These can be overridden on the command line.)

| |

| | |

| ===Examples===

| |

| ;Upload a single report file

| |

| <pre>

| |

| stride --testspace <nowiki>user:pwd@s2technologies.stridetestspace.com</nowiki> --project Sandbox --space "Tim Space" --name RSName --upload --output test.xml

| |

| </pre>

| |

| | |

| ;Upload multiple report files to a single result set

| |

| :Run stride once for each file to be aggregated. Note the argument to the <tt>--upload</tt> parameter in each command.

| |

| | |

| <pre>

| |

| stride --testspace <nowiki>user:pwd@s2technologies.stridetestspace.com</nowiki> --project Sandbox --space "Tim Space" --name RSName --upload start --output test1.xml

| |

| | |

| stride --testspace <nowiki>user:pwd@s2technologies.stridetestspace.com</nowiki> --project Sandbox --space "Tim Space" --name RSName --upload add --output test2.xml

| |

| | |

| stride --testspace <nowiki>user:pwd@s2technologies.stridetestspace.com</nowiki> --project Sandbox --space "Tim Space" --name RSName --upload add --output test3.xml

| |

| | |

| stride --testspace <nowiki>user:pwd@s2technologies.stridetestspace.com</nowiki> --project Sandbox --space "Tim Space" --name RSName --upload finish --output test4.xml

| |

| </pre>
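The start/add/finish sequence above can be generated for any list of report files. The following is a minimal sketch, assuming a POSIX shell. The function name <tt>upload_cmds</tt> is hypothetical, the Test Space values are the placeholders from the example above, and each command is printed rather than executed.

```shell
#!/bin/sh
# Print one stride upload command per report file:
# first file -> "--upload start", last file -> "--upload finish",
# files in between -> "--upload add".
# A single file gets a plain "--upload", as in the single-file example.
upload_cmds() {
    total=$#
    i=0
    for f in "$@"; do
        i=$((i + 1))
        if [ "$total" -eq 1 ]; then mode=""
        elif [ "$i" -eq 1 ]; then mode=" start"
        elif [ "$i" -eq "$total" ]; then mode=" finish"
        else mode=" add"
        fi
        echo "stride --testspace user:pwd@s2technologies.stridetestspace.com --project Sandbox --space \"Tim Space\" --name RSName --upload${mode} --output $f"
    done
}

upload_cmds test1.xml test2.xml test3.xml test4.xml
```

Printing the commands first makes it easy to check the order before anything is uploaded. As noted above, <tt>STRIDE_DEVICE</tt> must be empty in the session that finally runs them.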

| |

| | |

| ==Other Resources==

| |

| A technique to organize tests into suites is shown [[Organizing Tests into Suites|here]].

| |

| | |

| For full details on stride command line parameters, please see [[STRIDE Runner]].

| |

| | |

| == Notes ==

| |

| <references/>

| |

| | |

| [[Category:Running Tests]]

| |