Training Getting Started

| (23 intermediate revisions by 3 users not shown) | |||

| Line 9: | Line 9: | ||

The training collateral consists of the following: | The training collateral consists of the following: | ||

# The [[STRIDE_Overview#STRIDE_Framework | STRIDE Framework]] used to implement and execute tests | # The [[STRIDE_Overview#STRIDE_Framework | STRIDE Framework]] used to implement and execute tests | ||

# A set of specific [[# | #* The Framework is configured in an [[STRIDE_Off-Target_Environment | Off-Target Environment]] | ||

# A set of specific [[#Training | Training Modules]] that will guide you through the exercises | |||

# [[Main_Page | ''Wiki articles'']] that will provide background material and other technical information | # [[Main_Page | ''Wiki articles'']] that will provide background material and other technical information | ||

# | # [[STRIDE_Overview#STRIDE_Test_Space | STRIDE Test Space]] | ||

| Line 28: | Line 27: | ||

Before you can start setting up your environment you need the following 3 items: | Before you can start setting up your environment you need the following 3 items: | ||

* '''STRIDE Desktop Installation Package''' (one of the following) | * '''STRIDE Desktop Installation Package''' (one of the following) | ||

**<tt>STRIDE_framework-windows_4.3.yy.zip</tt> (Windows desktop) | |||

**<tt>STRIDE_framework-linux_4.3.yy.tgz</tt> (Linux desktop) | |||

* '''Training Source files''' | * '''Training Source files''' | ||

| Line 36: | Line 34: | ||

* '''Test Space User Account URL and Logon Credentials''' | * '''Test Space User Account URL and Logon Credentials''' | ||

** Test Space URL (<tt> | ** Test Space URL (<tt><i>YourCompany</i>.stridetestspace.com</tt>) | ||

** User-name | ** User-name | ||

** User-password | ** User-password | ||

== Desktop Setup == | === Desktop Setup === | ||

The training requires that you install the [[Desktop_Installation | Desktop Framework]] and that the [[STRIDE_Off-Target_Environment | Off-Target Environment]] is setup correctly and verified. | The training requires that you install the [[Desktop_Installation | Desktop Framework]] and that the [[STRIDE_Off-Target_Environment | Off-Target Environment]] is setup correctly and verified. | ||

| Line 48: | Line 46: | ||

# Install your [[Desktop_Installation | desktop Framework package]] | # Install your [[Desktop_Installation | desktop Framework package]] | ||

# Read about the [[STRIDE_Off-Target_Environment | Off-Target Environment]] | # Read about the [[STRIDE_Off-Target_Environment | Off-Target Environment]] | ||

# Install [[STRIDE_Off-Target_Environment#Host_Compiler | host compiler]] for your desktop | # Install [[STRIDE_Off-Target_Environment#Host_Compiler | host C++ compiler]] for your desktop (if needed) | ||

# Use the Off-Target SDK to [[Building_an_Off-Target_Test_App | build a Test App]] | # Use the Off-Target SDK to [[Building_an_Off-Target_Test_App | build a Test App]] | ||

# [[Building_an_Off-Target_Test_App#Diagnostics | Run STRIDE diagnostics]] with the Test App built | # [[Building_an_Off-Target_Test_App#Diagnostics | Run STRIDE diagnostics]] with the Test App built | ||

== Training Setup == | === Training Setup === | ||

The following source code can be found in the '''STRIDE_training_source.zip''' file: | The following source code can be found in the '''STRIDE_training_source.zip''' file: | ||

| Line 65: | Line 63: | ||

The ''software under test'' is contained in the file '''software_under_test.c | h'''. All of the public functions use '''sut_''' as a prefix. All training modules test against this file. Although the test examples are contained in C++ files, most of the test logic is written in standard C. | The ''software under test'' is contained in the file '''software_under_test.c | h'''. All of the public functions use '''sut_''' as a prefix. All training modules test against this file. Although the test examples are contained in C++ files, most of the test logic is written in standard C. | ||

* Extract STRIDE_training_source.zip into the directory <tt>%STRIDE_DIR%\SDK\Windows\sample_src</tt> (or <tt>$STRIDE_DIR/SDK/Posix/sample_src</tt> for Linux) | |||

* Rebuild the TestApp following the instructions for [[Building_an_Off-Target_Test_App#Build_Steps | Building a TestApp]]. | |||

* List all [[Test_Units | Test Units]] within the generated database file using the following command: | |||

'''Windows''' | '''Windows''' | ||

| Line 94: | Line 92: | ||

TestRuntime_Dynamic(int NumberOfTestCases) | TestRuntime_Dynamic(int NumberOfTestCases) | ||

== | === Run Training Tests === | ||

Here we will run all tests in the <tt>TestApp.sidb</tt> database.<ref>Note that the S2 diagnostic tests are treated separately, and are not run unless the <tt>--diagnostics</tt> option is specified to <tt>stride</tt>.</ref> | |||

* If you haven't done so already, [[Building_an_Off-Target_Test_App#Build_Steps | Build TestApp]] using the SDK makefile | |||

* Invoke TestApp found in the ''/out/bin'' directory | |||

* Create an [[Stride_Runner#Options | option file]] (<tt>myoptions.txt</tt>) in a directory of your choice. | |||

'''Windows''' | |||

##### Command Line Options ###### | |||

--device "TCP:localhost:8000" | |||

--database "%STRIDE_DIR%\SDK\Windows\out\TestApp.sidb" | |||

--output "%STRIDE_DIR%\SDK\Windows\sample_src\TestApp.xml" | |||

--log_level all | |||

'''Linux''' | |||

##### Command Line Options ##### | |||

--device "TCP:localhost:8000" | |||

--database "$STRIDE_DIR/SDK/Posix/out/TestApp.sidb" | |||

--output "$STRIDE_DIR/SDK/Posix/sample_src/TestApp.xml" | |||

--log_level all | |||

As part of the training users implement exercises (test cases). As each exercise is completed, the results are expected to be uploaded to Test Space. Accessing Test Space (uploading, viewing, etc.) requires | A couple of things to note: | ||

* If you set up an [[STRIDE_Runner#Environment_Variables | environment variable]] for the '''device''' option then it is not required in the option file. Note: Command line options override environment variables. | |||

* ''stride --help'' provides [[STRIDE_Runner#Options | options information]] | |||

If you haven't done so already, start <tt>TestApp</tt> running in a separate console window. | |||

Now run stride as shown below and verify summary results: | |||

> stride --options_file myoptions.txt --run "*" | |||

The output should look like this: | |||

<pre> | |||

Loading database... | |||

Connecting to device... | |||

Executing... | |||

test unit "TestBasic" | |||

> 3 passed, 1 failed, 0 in progress, 1 not in use. | |||

test unit "TestDouble_Definition" | |||

> 2 passed, 0 failed, 0 in progress, 1 not in use. | |||

test unit "TestDouble_Reference" | |||

> 2 passed, 0 failed, 0 in progress, 1 not in use. | |||

test unit "TestExpect_Data" | |||

> 2 passed, 0 failed, 0 in progress, 1 not in use. | |||

test unit "TestExpect_Misc" | |||

> 3 passed, 1 failed, 0 in progress, 1 not in use. | |||

test unit "TestExpect_Seq" | |||

> 2 passed, 0 failed, 0 in progress, 1 not in use. | |||

test unit "TestFile" | |||

> 0 passed, 0 failed, 0 in progress, 1 not in use. | |||

test unit "TestFixture" | |||

> 1 passed, 0 failed, 0 in progress, 1 not in use. | |||

test unit "TestParam" | |||

> 0 passed, 1 failed, 0 in progress, 1 not in use. | |||

test unit "TestRuntime_Dynamic" | |||

> 4 passed, 0 failed, 0 in progress, 1 not in use. | |||

test unit "TestRuntime_Static" | |||

> 2 passed, 0 failed, 0 in progress, 1 not in use. | |||

--------------------------------------------------------------------- | |||

Summary: 21 passed, 3 failed, 0 in progress, 11 not in use. | |||

Disconnecting from device... | |||

Saving result file... | |||

</pre> | |||

=== Interpreting Results === | |||

Open '''TestApp.html''' in your browser; this file is created in the directory from which you ran '''stride''' (or at the path specified via the <tt>--output</tt> command line option). | |||

If you're interested in the details of the tests, please see the test documentation contained in the test report. | |||

=== Test Space Access === | |||

As part of the training, users implement exercises (test cases). As each exercise is completed, the results are expected to be uploaded to Test Space. Accessing Test Space (uploading, viewing, etc.) requires a user-name and password. Before working on a training module please confirm that your user account has been set up by [[Test_Space_Setup | logging in]]. | |||

Test Space will have expected results using [[Creating_And_Using_Baselines | baselines]]. This is used to automatically verify if the exercises have been implemented correctly (at least to some degree). For capturing test results a '''Training''' project has been created with the following '''Spaces''': | Test Space will have expected results using [[Creating_And_Using_Baselines | baselines]]. This is used to automatically verify if the exercises have been implemented correctly (at least to some degree). For capturing test results a '''Training''' project has been created with the following '''Spaces''': | ||

Sandbox - Results for training setup | |||

TestBasic - Basics training results: TestBasic.cpp | h | TestBasic - Basics training results: TestBasic.cpp | h | ||

TestParam - Parameters training results: TestParam.cpp | h | TestParam - Parameters training results: TestParam.cpp | h | ||

| Line 111: | Line 180: | ||

To publish your results using the [[STRIDE_Runner | STRIDE Runner]] the following command-line options should be used: | To publish your results using the [[STRIDE_Runner | STRIDE Runner]] the following command-line options should be used: | ||

--testspace <nowiki> | --testspace <nowiki>username:password@yourcompany.stridetestspace.com</nowiki> | ||

--project Training | --project Training | ||

--space ''MODULENAME'' | --space ''MODULENAME'' | ||

| Line 121: | Line 190: | ||

* If you access the Internet via an HTTP proxy please read [[STRIDE_Runner#Using_a_Proxy | '''this article''']] | * If you access the Internet via an HTTP proxy please read [[STRIDE_Runner#Using_a_Proxy | '''this article''']] | ||

== | To make it easier for now we recommend that you update your existing option file (<tt>myoptions.txt</tt>) with the following: | ||

#### Test Space options (partial) ##### | |||

#### Note - make sure to change username, etc. #### | |||

--testspace <nowiki>username:password@yourcompany.stridetestspace.com</nowiki> | |||

--project Training | |||

--name YOURNAME | |||

=== Publish Test Space Results === | |||

To complete the setup publish your results to Test Space. Please make sure to use '''Sandbox''' as the space to upload your results to (see below). | |||

> stride --options_file myoptions.txt --run "*" --space Sandbox --upload | |||

Loading database... | |||

Connecting to device... | |||

Executing... | |||

test unit "TestBasic" | |||

> 3 passed, 1 failed, 0 in progress, 1 not in use. | |||

test unit "TestDouble_Definition" | |||

> 2 passed, 0 failed, 0 in progress, 1 not in use. | |||

test unit "TestDouble_Reference" | |||

> 2 passed, 0 failed, 0 in progress, 1 not in use. | |||

test unit "TestExpect_Data" | |||

> 2 passed, 0 failed, 0 in progress, 1 not in use. | |||

test unit "TestExpect_Misc" | |||

> 3 passed, 1 failed, 0 in progress, 1 not in use. | |||

test unit "TestExpect_Seq" | |||

> 2 passed, 0 failed, 0 in progress, 1 not in use. | |||

test unit "TestFile" | |||

> 0 passed, 0 failed, 0 in progress, 1 not in use. | |||

test unit "TestFixture" | |||

> 1 passed, 0 failed, 0 in progress, 1 not in use. | |||

test unit "TestParam" | |||

> 0 passed, 1 failed, 0 in progress, 1 not in use. | |||

test unit "TestRuntime_Dynamic" | |||

> 4 passed, 0 failed, 0 in progress, 1 not in use. | |||

test unit "TestRuntime_Static" | |||

> 2 passed, 0 failed, 0 in progress, 1 not in use. | |||

--------------------------------------------------------------------- | |||

Summary: 21 passed, 3 failed, 0 in progress, 11 not in use. | |||

Disconnecting from device... | |||

Saving result file... | |||

Uploading to test space... | |||

=== Confirming Setup === | |||

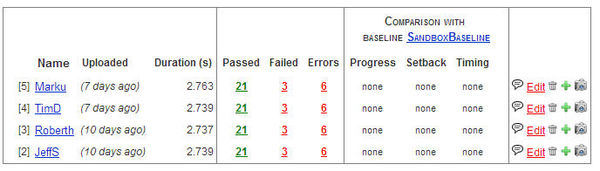

The | After you have run and uploaded the results of the training setup, you should confirm the correctness of your work. The training setup results will end up with a mix of passes and failures, so we use a [[Creating_And_Using_Baselines | Test Space baseline]] to get an aggregate comparison between expected and actual results. | ||

To validate your | To validate your setup: | ||

# Navigate to | # Navigate to Sandbox set in Test Space and look under the column labeled '''''SEQUENTIAL COMPARISON''''' | ||

# Confirm that '''''none''''' is indicated for each of the sub-column. | # Confirm that '''''none''''' is indicated for each of the sub-column. If so, you have completed the setup and are ready to move onto the training modules. | ||

[[image: | [[image:Training_Confirming_Setup.jpg|none|600px| Test Space baseline example]] | ||

== | == Training == | ||

At this point you should be ready to start the actual training. There are '''7 separate training modules''' and we recommend the following order: | At this point you should be ready to start the actual training. There are '''7 separate training modules''' and we recommend the following order: | ||

| Line 146: | Line 259: | ||

* [[Training_Doubling | '''Doubling''']] - Replacing a dependency with a stub, fake, or mock | * [[Training_Doubling | '''Doubling''']] - Replacing a dependency with a stub, fake, or mock | ||

== Training Confirmation == | |||

As you run and upload each test unit containing your worked training exercises, you should confirm the correctness of your work. | |||

The correctly worked training test units will end up with a mix of passes and failures, so we use a [[Creating_And_Using_Baselines | Test Space baseline]] to get an aggregate comparison between expected and actual results | |||

To validate your work: | |||

# Navigate to your result set in Test Space and look under the column labeled '''''SEQUENTIAL COMPARISON''''' | |||

# Confirm that '''''none''''' is indicated for each of the sub-column. | |||

==Notes== | |||

<references/> | |||

[[Category: Training]] | [[Category: Training]] | ||

Latest revision as of 19:01, 8 September 2015

Overview

Our training approach is based on a self-guided tour of the STRIDE Testing Features using reference examples and assigned exercises. The set of examples and the implemented exercises will be built and executed using a standard desktop computer.

The software under test, reference examples, and exercises have been designed to be as simple as possible while sufficiently demonstrating the topic at hand. In particular, the software under test is very light on core application logic -- the focus instead is on the test code that leverages STRIDE to define and execute tests.

The user is expected to work through each of the training modules covering a specific testing feature. Once the exercises are completed (actual test cases implemented), the results are published to Test Space (and validated against a pre-created baseline).

The training collateral consists of the following:

- The STRIDE Framework used to implement and execute tests

  - The Framework is configured in an Off-Target Environment

- A set of specific Training Modules that will guide you through the exercises

- Wiki articles that will provide background material and other technical information

- STRIDE Test Space

For more details concerning STRIDE refer to the following:

- Overview screencast

- What is Unique About STRIDE

- Types of Testing Supported

- Frequently Asked Questions

For questions and support email us

Before Starting

Before you can start setting up your environment you need the following 3 items:

- STRIDE Desktop Installation Package (one of the following)

  - STRIDE_framework-windows_4.3.yy.zip (Windows desktop)

  - STRIDE_framework-linux_4.3.yy.tgz (Linux desktop)

- Training Source files

  - STRIDE_training_source.zip

- Test Space User Account URL and Logon Credentials

  - Test Space URL (YourCompany.stridetestspace.com)

  - User-name

  - User-password

Desktop Setup

The training requires that you install the Desktop Framework and that the Off-Target Environment is set up correctly and verified.

For an overview of the installation steps please refer to this article.

The following steps are required:

- Install your desktop Framework package

- Read about the Off-Target Environment

- Install a host C++ compiler for your desktop (if needed)

- Use the Off-Target SDK to build a Test App

- Run STRIDE diagnostics with the Test App built

Training Setup

The following source code can be found in the STRIDE_training_source.zip file:

software_under_test.c | h
TestBasic.cpp | h
TestParam.cpp | h
TestFixture.cpp | h
TestExpect.cpp | h
TestRuntime.cpp | h
TestFile.cpp | h
TestDouble.cpp | h

The software under test is contained in the file software_under_test.c | h. All of the public functions use sut_ as a prefix. All training modules test against this file. Although the test examples are contained in C++ files, most of the test logic is written in standard C.

- Extract STRIDE_training_source.zip into the directory %STRIDE_DIR%\SDK\Windows\sample_src (or $STRIDE_DIR/SDK/Posix/sample_src for Linux)

- Rebuild the TestApp following the instructions for Building a TestApp.

- List all Test Units within the generated database file using the following command:

Windows

> stride --database="%STRIDE_DIR%\SDK\Windows\out\TestApp.sidb" --list

Linux

> stride --database="$STRIDE_DIR/SDK/Posix/out/TestApp.sidb" --list

The following remoted Functions and Test Units should be displayed:

Functions

sut_strcpy(char const * input, char * output) : void

strlen(char const * _Str) : size_t

Test Units

TestBasic()

TestDouble_Reference()

TestDouble_Definition()

TestExpect_Seq()

TestExpect_Data()

TestExpect_Misc()

TestFile()

TestFixture()

TestParam(int data1, int data2, char * szString)

TestRuntime_Static()

TestRuntime_Dynamic(int NumberOfTestCases)
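The listing above can also be checked mechanically. The following is a minimal sketch in Python, assuming the `--list` output has the layout shown here (a `Test Units` heading followed by one signature per line). The function name `parse_test_units` and the `SAMPLE` text are illustrative and not part of STRIDE.

```python
import re

# Test Unit names expected after the training sources are built in.
EXPECTED_UNITS = {
    "TestBasic", "TestDouble_Reference", "TestDouble_Definition",
    "TestExpect_Seq", "TestExpect_Data", "TestExpect_Misc", "TestFile",
    "TestFixture", "TestParam", "TestRuntime_Static", "TestRuntime_Dynamic",
}


def parse_test_units(listing):
    """Return the set of names listed under the 'Test Units' heading."""
    names = set()
    in_units = False
    for raw in listing.splitlines():
        line = raw.strip()
        if line == "Test Units":
            in_units = True
            continue
        if line == "Functions":
            in_units = False
            continue
        if in_units:
            # A signature looks like: Name(arguments)
            match = re.match(r"([A-Za-z_]\w*)\(", line)
            if match:
                names.add(match.group(1))
    return names


# Listing copied from the expected output shown above.
SAMPLE = """\
Functions
sut_strcpy(char const * input, char * output) : void
strlen(char const * _Str) : size_t
Test Units
TestBasic()
TestDouble_Reference()
TestDouble_Definition()
TestExpect_Seq()
TestExpect_Data()
TestExpect_Misc()
TestFile()
TestFixture()
TestParam(int data1, int data2, char * szString)
TestRuntime_Static()
TestRuntime_Dynamic(int NumberOfTestCases)
"""

missing = EXPECTED_UNITS - parse_test_units(SAMPLE)
print("missing test units:", sorted(missing))
```

If the set of missing names is not empty, the training sources were most likely not extracted into `sample_src` before the TestApp was rebuilt.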

Run Training Tests

Here we will run all tests in the TestApp.sidb database.[1]

- If you haven't done so already, Build TestApp using the SDK makefile

- Invoke TestApp found in the /out/bin directory

- Create an option file (myoptions.txt) in a directory of your choice.

Windows

##### Command Line Options ######
--device "TCP:localhost:8000"
--database "%STRIDE_DIR%\SDK\Windows\out\TestApp.sidb"
--output "%STRIDE_DIR%\SDK\Windows\sample_src\TestApp.xml"
--log_level all

Linux

##### Command Line Options #####
--device "TCP:localhost:8000"
--database "$STRIDE_DIR/SDK/Posix/out/TestApp.sidb"
--output "$STRIDE_DIR/SDK/Posix/sample_src/TestApp.xml"
--log_level all

A couple of things to note:

- If you set up an environment variable for the device option then it is not required in the option file. Note: Command line options override environment variables.

- stride --help provides options information
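If you prefer to generate the option file rather than type it, the sketch below shows one way to do so in Python. It uses only the options listed above. The helper name `build_options` is hypothetical, and unlike the hand-written files it writes the fully expanded installation path instead of the `STRIDE_DIR` variable reference.

```python
def build_options(stride_dir, windows):
    """Return option-file text for the given STRIDE installation directory.

    stride_dir: value of the STRIDE_DIR environment variable.
    windows:    True for the Windows SDK layout, False for Posix.
    """
    sdk = "Windows" if windows else "Posix"
    sep = "\\" if windows else "/"
    # Drop any trailing separator so the joined path has no doubled ones.
    base = sep.join([stride_dir.rstrip("\\/"), "SDK", sdk])
    lines = [
        "##### Command Line Options #####",
        '--device "TCP:localhost:8000"',
        '--database "{}"'.format(sep.join([base, "out", "TestApp.sidb"])),
        '--output "{}"'.format(sep.join([base, "sample_src", "TestApp.xml"])),
        "--log_level all",
    ]
    return "\n".join(lines) + "\n"
```

Calling `build_options(os.environ["STRIDE_DIR"], windows=False)` and writing the returned text to `myoptions.txt` would reproduce the Linux file shown above with the path expanded.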

If you haven't done so already, start TestApp running in a separate console window.

Now run stride as shown below and verify summary results:

> stride --options_file myoptions.txt --run "*"

The output should look like this:

Loading database...

Connecting to device...

Executing...

test unit "TestBasic"

> 3 passed, 1 failed, 0 in progress, 1 not in use.

test unit "TestDouble_Definition"

> 2 passed, 0 failed, 0 in progress, 1 not in use.

test unit "TestDouble_Reference"

> 2 passed, 0 failed, 0 in progress, 1 not in use.

test unit "TestExpect_Data"

> 2 passed, 0 failed, 0 in progress, 1 not in use.

test unit "TestExpect_Misc"

> 3 passed, 1 failed, 0 in progress, 1 not in use.

test unit "TestExpect_Seq"

> 2 passed, 0 failed, 0 in progress, 1 not in use.

test unit "TestFile"

> 0 passed, 0 failed, 0 in progress, 1 not in use.

test unit "TestFixture"

> 1 passed, 0 failed, 0 in progress, 1 not in use.

test unit "TestParam"

> 0 passed, 1 failed, 0 in progress, 1 not in use.

test unit "TestRuntime_Dynamic"

> 4 passed, 0 failed, 0 in progress, 1 not in use.

test unit "TestRuntime_Static"

> 2 passed, 0 failed, 0 in progress, 1 not in use.

---------------------------------------------------------------------

Summary: 21 passed, 3 failed, 0 in progress, 11 not in use.

Disconnecting from device...

Saving result file...
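To verify the summary without counting by hand, the console output can be parsed and the per-unit counts added up. This is a minimal sketch in Python, assuming the output keeps the format shown above; `parse_run` and `totals` are hypothetical helper names, not STRIDE features.

```python
import re

# Matches the counts on both the per-unit lines and the summary line.
COUNTS = re.compile(
    r"(\d+) passed, (\d+) failed, (\d+) in progress, (\d+) not in use"
)


def parse_run(output):
    """Return (per_unit, summary); counts are (passed, failed, in progress, not in use)."""
    per_unit = {}
    summary = None
    current = None
    for raw in output.splitlines():
        line = raw.strip()
        unit = re.match(r'test unit "([^"]+)"', line)
        if unit:
            current = unit.group(1)
            continue
        counts = COUNTS.search(line)
        if not counts:
            continue
        values = tuple(int(v) for v in counts.groups())
        if line.startswith("Summary:"):
            summary = values
        elif current is not None:
            per_unit[current] = values
            current = None
    return per_unit, summary


def totals(per_unit):
    """Add up each count column across all test units."""
    return tuple(sum(column) for column in zip(*per_unit.values()))


# Output copied from the expected run shown above.
SAMPLE = """\
test unit "TestBasic"
> 3 passed, 1 failed, 0 in progress, 1 not in use.
test unit "TestDouble_Definition"
> 2 passed, 0 failed, 0 in progress, 1 not in use.
test unit "TestDouble_Reference"
> 2 passed, 0 failed, 0 in progress, 1 not in use.
test unit "TestExpect_Data"
> 2 passed, 0 failed, 0 in progress, 1 not in use.
test unit "TestExpect_Misc"
> 3 passed, 1 failed, 0 in progress, 1 not in use.
test unit "TestExpect_Seq"
> 2 passed, 0 failed, 0 in progress, 1 not in use.
test unit "TestFile"
> 0 passed, 0 failed, 0 in progress, 1 not in use.
test unit "TestFixture"
> 1 passed, 0 failed, 0 in progress, 1 not in use.
test unit "TestParam"
> 0 passed, 1 failed, 0 in progress, 1 not in use.
test unit "TestRuntime_Dynamic"
> 4 passed, 0 failed, 0 in progress, 1 not in use.
test unit "TestRuntime_Static"
> 2 passed, 0 failed, 0 in progress, 1 not in use.
Summary: 21 passed, 3 failed, 0 in progress, 11 not in use.
"""

per_unit, summary = parse_run(SAMPLE)
print("units:", len(per_unit), "summary matches:", totals(per_unit) == summary)
```

The three failures (in TestBasic, TestExpect_Misc, and TestParam) are part of the expected setup results, so a mismatch with these counts is a better sign of a setup problem than the mere presence of failures.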

Interpreting Results

Open TestApp.html in your browser; this file is created in the directory from which you ran stride (or at the path specified via the --output command line option).

If you're interested in the details of the tests, please see the test documentation contained in the test report.

Test Space Access

As part of the training, users implement exercises (test cases). As each exercise is completed, the results are expected to be uploaded to Test Space. Accessing Test Space (uploading, viewing, etc.) requires a user-name and password. Before working on a training module please confirm that your user account has been set up by logging in.

Test Space holds the expected results as baselines, which are used to automatically verify (at least to some degree) that the exercises have been implemented correctly. To capture test results, a Training project has been created with the following Spaces:

Sandbox - Results for training setup
TestBasic - Basics training results: TestBasic.cpp | h
TestParam - Parameters training results: TestParam.cpp | h
TestFixture - Fixturing training results: TestFixture.cpp | h
TestExpect - Expectations training results: TestExpect.cpp | h
TestRuntime - Runtime API training results: TestRuntime.cpp | h
TestFile - File IO training results: TestFile.cpp | h
TestDouble - Doubling training results: TestDouble.cpp | h

To publish your results using the STRIDE Runner the following command-line options should be used:

--testspace username:password@yourcompany.stridetestspace.com
--project Training
--space MODULENAME
--name YOURNAME

Notes:

- Concerning the MODULENAME option: use the name that corresponds to the training module currently underway (e.g. TestBasic, TestParam, etc.)

- YOURNAME should be set to your name (e.g. JohnD); omit spaces from this string

- If you access the Internet via an HTTP proxy please read this article

To simplify the command line, we recommend that you add the following to your existing option file (myoptions.txt):

#### Test Space options (partial) #####
#### Note - make sure to change username, etc. ####
--testspace username:password@yourcompany.stridetestspace.com
--project Training
--name YOURNAME

Publish Test Space Results

To complete the setup, publish your results to Test Space. Make sure to use Sandbox as the space to upload your results to (see below).

> stride --options_file myoptions.txt --run "*" --space Sandbox --upload

Loading database...
Connecting to device...
Executing...
test unit "TestBasic"
> 3 passed, 1 failed, 0 in progress, 1 not in use.
test unit "TestDouble_Definition"
> 2 passed, 0 failed, 0 in progress, 1 not in use.
test unit "TestDouble_Reference"
> 2 passed, 0 failed, 0 in progress, 1 not in use.
test unit "TestExpect_Data"
> 2 passed, 0 failed, 0 in progress, 1 not in use.
test unit "TestExpect_Misc"
> 3 passed, 1 failed, 0 in progress, 1 not in use.
test unit "TestExpect_Seq"
> 2 passed, 0 failed, 0 in progress, 1 not in use.
test unit "TestFile"
> 0 passed, 0 failed, 0 in progress, 1 not in use.
test unit "TestFixture"
> 1 passed, 0 failed, 0 in progress, 1 not in use.
test unit "TestParam"
> 0 passed, 1 failed, 0 in progress, 1 not in use.
test unit "TestRuntime_Dynamic"
> 4 passed, 0 failed, 0 in progress, 1 not in use.
test unit "TestRuntime_Static"
> 2 passed, 0 failed, 0 in progress, 1 not in use.
---------------------------------------------------------------------
Summary: 21 passed, 3 failed, 0 in progress, 11 not in use.
Disconnecting from device...
Saving result file...
Uploading to test space...
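Because the same upload command is repeated for every training module with only the space name changing, it can be convenient to assemble it in a script. The following is a minimal sketch in Python that uses only the options shown in this article; `upload_command` and `valid_name` are hypothetical helper names.

```python
# Spaces defined in the Training project, as listed in this article.
SPACES = (
    "Sandbox", "TestBasic", "TestParam", "TestFixture",
    "TestExpect", "TestRuntime", "TestFile", "TestDouble",
)


def upload_command(space, options_file="myoptions.txt"):
    """Return the stride argument list that runs all tests and uploads to a space."""
    if space not in SPACES:
        raise ValueError("unknown Training space: {!r}".format(space))
    return [
        "stride",
        "--options_file", options_file,
        "--run", "*",
        "--space", space,
        "--upload",
    ]


def valid_name(name):
    """A --name value must be non-empty and contain no whitespace."""
    return bool(name) and not any(ch.isspace() for ch in name)


print(" ".join(upload_command("Sandbox")))
```

The returned list can be passed to `subprocess.run` as is. Because no shell is involved in that case, the `*` pattern reaches `stride` unexpanded without extra quoting.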

Confirming Setup

After you have run and uploaded the results of the training setup, you should confirm the correctness of your work. The training setup results will end up with a mix of passes and failures, so we use a Test Space baseline to get an aggregate comparison between expected and actual results.

To validate your setup:

- Navigate to the Sandbox result set in Test Space and look under the column labeled SEQUENTIAL COMPARISON

- Confirm that none is indicated for each of the sub-columns. If so, you have completed the setup and are ready to move on to the training modules.

Training

At this point you should be ready to start the actual training. There are 7 separate training modules and we recommend the following order:

Introductory

- Basics - Covers basics of implementing and executing test cases

- Parameters - How to pass parameters to a test

- Fixturing - Leveraging setup and teardown features

Advanced

- Expectations - Validating code sequencing along with state data

- Runtime API - Using the runtime services to dynamically create test suites / cases

- File IO - Reading and writing files existing on the host

- Doubling - Replacing a dependency with a stub, fake, or mock

Training Confirmation

As you run and upload each test unit containing your worked training exercises, you should confirm the correctness of your work.

The correctly worked training test units will end up with a mix of passes and failures, so we use a Test Space baseline to get an aggregate comparison between expected and actual results.

To validate your work:

- Navigate to your result set in Test Space and look under the column labeled SEQUENTIAL COMPARISON

- Confirm that none is indicated for each of the sub-columns.

Notes

[1] Note that the S2 diagnostic tests are treated separately, and are not run unless the --diagnostics option is specified to stride.